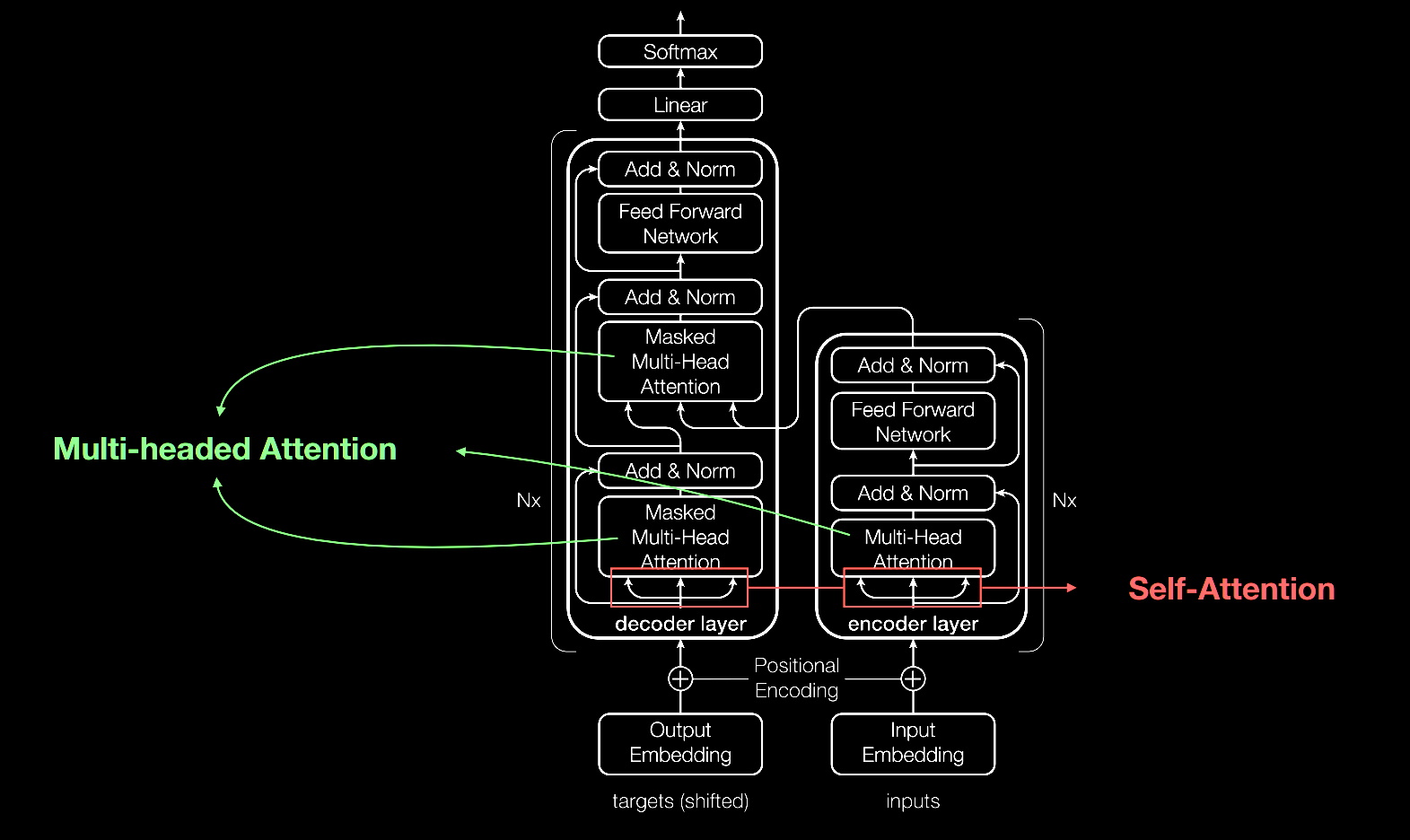

The transformer is a neural network architecture introduced in 2017 by Vaswani et al. in the paper "Attention Is All You Need". It is primarily used for natural language processing tasks, such as machine translation, text summarization, and language modeling. The original transformer is an encoder-decoder model, which means that it has two main components: an encoder that processes the input sequence and a decoder that generates the output sequence.

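One of the key innovations of the transformer is its reliance on self-attention, which lets every position in a sequence attend directly to every other position. This allows the model to learn global dependencies within a sequence and, through encoder-decoder attention, between the input and output sequences. This is in contrast to previous models built on recurrent neural networks (RNNs), which pass information step by step through a hidden state, and convolutional neural networks (CNNs), in which each layer sees only a local window. In both cases, relating two distant positions requires many sequential steps or many stacked layers.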
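As a rough illustration of the idea, the sketch below implements single-head scaled dot-product self-attention in NumPy. The function names, the random weights, and the toy dimensions are assumptions chosen for the example, not part of any particular library.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x:             (seq_len, d_model) token representations
    w_q, w_k, w_v: (d_model, d_k) projection matrices (random here, learned in practice)
    """
    q = x @ w_q                                  # queries
    k = x @ w_k                                  # keys
    v = x @ w_v                                  # values
    scores = q @ k.T / np.sqrt(k.shape[-1])      # (seq_len, seq_len) pairwise scores
    weights = softmax(scores, axis=-1)           # each row sums to 1
    return weights @ v, weights

# Toy sizes, chosen only for illustration.
rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 8, 4
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape, weights.shape)  # (5, 4) (5, 5)
```

Row `i` of `weights` shows how strongly position `i` draws on every other position, which is what makes the dependencies global: no position is more than one step away from any other.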

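The transformer uses a multi-head self-attention mechanism, which runs several attention operations in parallel, each with its own learned projections of the queries, keys, and values. Each head can attend to different parts of the sequence, or to different kinds of relationships, at the same time, and the outputs of the heads are concatenated and projected back to the model dimension. This allows the model to learn more complex relationships than a single attention head could capture, which in turn tends to produce more accurate translations.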
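A minimal NumPy sketch of multi-head self-attention follows. It assumes the model dimension divides evenly by the number of heads and uses random matrices in place of learned weights; the function and variable names are illustrative only.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq_len, d_model); w_q, w_k, w_v, w_o: (d_model, d_model)."""
    seq_len, d_model = x.shape
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q = split_heads(x @ w_q)
    k = split_heads(x @ w_k)
    v = split_heads(x @ w_v)

    # Every head computes its own (seq_len, seq_len) attention pattern.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                          # (num_heads, seq_len, d_head)

    # Concatenate the heads and project back to d_model.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o

# Toy sizes, chosen only for illustration.
rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 8, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape)  # (6, 8)
```

Splitting the projected vectors into heads keeps the total cost close to that of one full-width attention operation while letting each head form a different attention pattern.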

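The transformer also uses a technique called masking, which prevents the model from attending to certain positions in a sequence. Two kinds of mask are common: a padding mask hides the filler tokens added so that all sequences in a batch have the same length, and a causal (look-ahead) mask in the decoder hides every position after the current one. The causal mask matters for tasks like machine translation, where the decoder generates the output one token at a time and, during training, must not see the words it is supposed to predict.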
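The sketch below shows one common way to apply a causal mask: disallowed positions receive a large negative score before the softmax, so their attention weight becomes effectively zero. The helper names and the constant `-1e9` are assumptions for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def causal_mask(seq_len):
    # True where attention is allowed: position i may see positions 0..i.
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def masked_attention_weights(scores, mask):
    # Replace disallowed scores with a large negative number before softmax.
    masked_scores = np.where(mask, scores, -1e9)
    return softmax(masked_scores, axis=-1)

# All-zero scores make the effect of the mask easy to read.
scores = np.zeros((4, 4))
weights = masked_attention_weights(scores, causal_mask(4))
print(np.round(weights, 2))
```

With all-zero scores, row `i` spreads its weight evenly over positions `0..i` and assigns nothing to later positions. A padding mask works the same way, with `False` in the columns that correspond to padding tokens.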

One of the main advantages of the transformer over previous models is its parallelizability. Because self-attention has no recurrence, all positions in a sequence can be processed at once during training rather than one step at a time, as in an RNN. This maps well onto GPUs and other parallel hardware and greatly speeds up training.

In addition to its use in natural language processing tasks, the transformer has also been applied to other domains, such as computer vision and reinforcement learning. In computer vision, the transformer has been used for tasks like image recognition and object detection. In reinforcement learning, the transformer has been used for tasks like game playing and robot control.

Overall, the transformer has proven to be a powerful and effective model for natural language processing and other tasks. Its ability to learn global dependencies and its parallelizability make it a valuable tool for researchers and practitioners working in the field of artificial intelligence.

One of the key factors in the success of the transformer is its ability to capture long-range dependencies between words in a sentence. Traditional RNNs, and to a lesser extent LSTMs, have difficulty learning these dependencies: information about earlier words must be carried through every intermediate step in a fixed-size hidden state, and gradients tend to shrink over long distances. The transformer, on the other hand, uses self-attention to connect any two positions directly, allowing it to draw on information from the entire input sequence.

The transformer also makes use of skip connections (also called residual connections): the input to each sub-layer is added to that sub-layer's output, and the sum is then layer-normalized. This gives gradients a direct path through the network, which helps to reduce the vanishing gradient problem, where gradients become very small and the model is unable to learn effectively. Skip connections also let later layers reuse information from earlier layers, which can lead to more accurate predictions.
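A minimal sketch of a skip connection around a sub-layer is shown below, using the post-norm arrangement `LayerNorm(x + Sublayer(x))` from the original paper. The learned gain and bias of layer normalization are omitted for brevity, and the feed-forward sub-layer with random weights is only a stand-in.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    # Normalize each position's feature vector to zero mean and unit variance.
    # (Learned scale and shift parameters are left out of this sketch.)
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def residual_block(x, sublayer):
    # The skip connection adds the sub-layer's input to its output.
    return layer_norm(x + sublayer(x))

# Stand-in sub-layer: a position-wise feed-forward network with ReLU.
rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 5, 8, 16
w1 = rng.normal(size=(d_model, d_ff))
w2 = rng.normal(size=(d_ff, d_model))

def feed_forward(h):
    return np.maximum(0.0, h @ w1) @ w2

x = rng.normal(size=(seq_len, d_model))
out = residual_block(x, feed_forward)
print(out.shape)  # (5, 8)
```

Because the input `x` is added back unchanged, the block can pass information through even when the sub-layer contributes little, which is the property that eases gradient flow in deep stacks.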

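Another important aspect of the transformer is its use of positional encoding, which gives the model information about the order of words in a sentence. Self-attention on its own is insensitive to order: shuffling the input tokens simply shuffles the outputs in the same way. The transformer therefore adds a position-dependent vector to each token embedding. The original model used fixed sinusoidal functions of the position, and learned position embeddings are a common alternative. This is important because the meaning of a sentence often depends on the order of the words, and the transformer needs to capture this information in order to generate accurate translations.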
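The sinusoidal scheme from the original paper can be sketched as follows, where even feature indices use a sine and odd indices use a cosine of the position scaled by a wavelength that grows with the feature index. The function name is illustrative, and the sketch assumes an even `d_model`.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len, d_model) matrix of fixed positional encodings.

    PE[pos, 2i]   = sin(pos / 10000^(2i / d_model))
    PE[pos, 2i+1] = cos(pos / 10000^(2i / d_model))
    Assumes d_model is even.
    """
    positions = np.arange(seq_len)[:, None]          # (seq_len, 1)
    even_dims = np.arange(0, d_model, 2)[None, :]    # (1, d_model / 2)
    angles = positions / np.power(10000.0, even_dims / d_model)

    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

pe = sinusoidal_positional_encoding(seq_len=10, d_model=8)
print(pe.shape)  # (10, 8)

# The encoding is added to the token embeddings before the first layer.
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(10, 8))                # stand-in embeddings
inputs = embeddings + pe
```

Each position receives a distinct pattern of values, so two identical words at different positions enter the network with different representations.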

In conclusion, the transformer is a powerful and effective model for natural language processing tasks. Its ability to learn long-range dependencies, its use of skip connections and positional encoding, and its parallelizability make it a valuable tool for researchers and practitioners working in the field of artificial intelligence.
